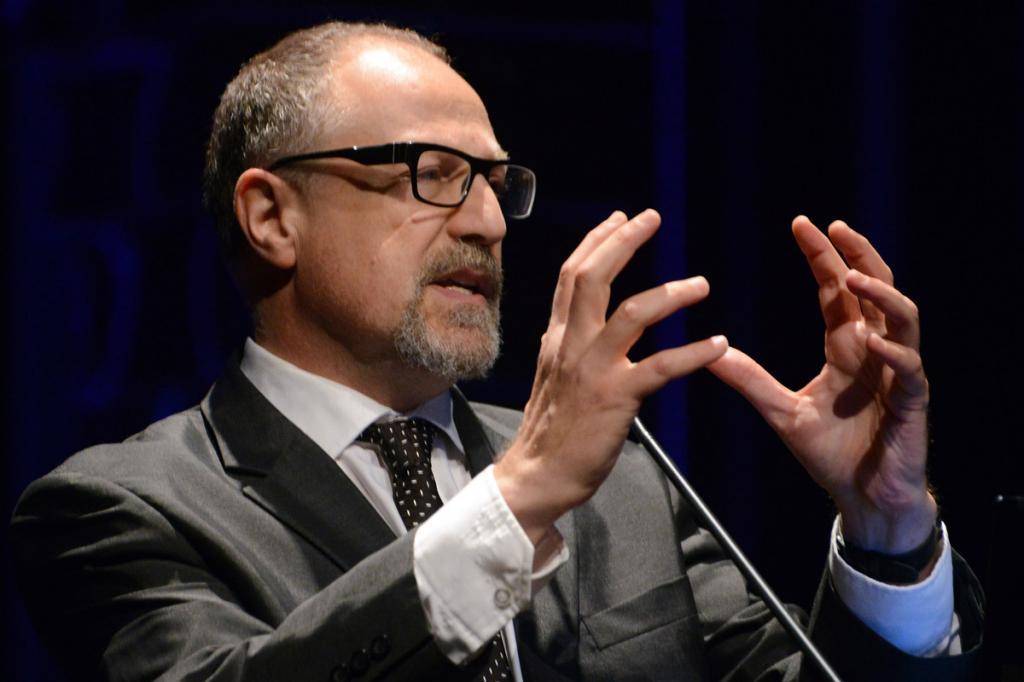

Luis Lamb

Federal University of Rio Grande do Sul (UFRGS), Brazil

On the Evolution and Contributions of Neurosymbolic AI

Talk outline: Although AI and machine learning have significantly impacted science, technology, and economic activities, several research questions remain open in advancing the agenda of trustworthy AI. Researchers have shown that there is a need for AI models that soundly integrate logical reasoning and machine learning. Neurosymbolic AI aims to bring together effective machine learning models and the logical essence of reasoning in AI. In recent years, technology companies have organized research groups to develop neurosymbolic AI technologies, as contemporary AI systems require sound reasoning and improved explainability. In this presentation, I address how neurosymbolic AI has evolved over the last decades. I also address recent contributions within the field to building richer AI models and technologies, and how neurosymbolic AI can contribute to improved AI explainability and trust.

Bio: Luis C. Lamb is a Full Professor at the Federal University of Rio Grande do Sul (UFRGS), Brazil. He holds both the Ph.D. and Diploma in Computer Science from Imperial College London (2000), as well as an MSc (1995) and a BSc (1992) in Computer Science from UFRGS, Brazil. Lamb conducts research on neurosymbolic AI, the integration of learning and reasoning, ethics in AI, and innovation strategies. He co-authored two research monographs in AI: Neural-Symbolic Cognitive Reasoning, with Garcez and Gabbay (Springer, 2009), and Compiled Labelled Deductive Systems (IOP, 2004). His research has led to publications in flagship journals and conferences including AAAI, IJCAI, NIPS, HCOMP, and ICSE. He co-organized two Dagstuhl Seminars on neurosymbolic AI, Neural-Symbolic Learning and Reasoning (2014) and Human-Like Neural-Symbolic Computing (2017), and several workshops on neurosymbolic learning and reasoning at AAAI, IJCAI, ECAI, and IJCLR. He has been an invited speaker at IBM, Samsung, The AI Debate #2, an AAAI 2021 panel, NeurIPS and CIKM workshops, and numerous meetings on neurosymbolic AI, innovation, and technology. Lamb is a former Secretary of Innovation, Science and Technology of the State of Rio Grande do Sul (2019-2022), and a former Vice President of Research (2016-2018) and Dean of the Institute of Informatics (2011-2016) at the Federal University of Rio Grande do Sul, Brazil. He has also been a Visiting Fellow at the MIT Sloan School of Management and a Sloan Fellows MBA student at MIT.